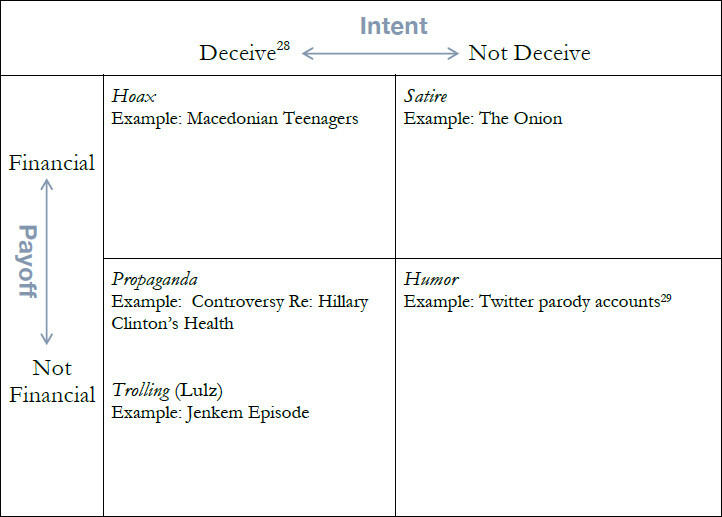

This report identifies several distinct types of fake news, based on two defining features: intention to deceive readers and financial or non-financial motivations, as follows:

- satire: purposefully false content, financially motivated, not intended to deceive readers

- hoax: purposefully false content, financially motivated, intended to deceive readers

- propaganda: purposefully biased or false content, motivated by an attempt to promote a political cause or point of view, intended to deceive the reader

- trolling: biased or fake content, motivated by personal amusement (the lulz), intended to deceive the reader

However, even if the definitions are clear, in practice intentions, motivations, and even fact and fiction may be mixed, creating grey areas. It is often not possible to assess the actual intention (subjective intent), so regulators may base their decision on the objective intent, namely the format and content of the article. Even if a website includes a satire disclaimer, readers often read only the headlines, so they may never have a chance to see the disclaimer. Solutions to hoaxes should not target satire.

The report discusses several kinds of solutions. Those based on law may conflict with freedom of expression, and removing liability shields may be dangerous. The report suggests instead that one solution may be to expand legal protections for Internet platforms, to encourage them to pursue editorial functions. Propaganda is rarely entirely false, so targeting false content may not help.

An example of a market-based solution is Google's announcement that it would ban websites that publish fake news articles from using AdSense. This may be effective for hoaxes, but not for propaganda and trolling, and it risks including satire websites. Platforms that do not rely on online advertising may be a solution. A trusted media entity like the BBC could create a social network leveraging its media expertise to assess news content.

Behaviour can be constrained through code and architecture, but this cannot distinguish satire from hoax, and cannot help with propaganda. We should encourage platforms to experiment with technical solutions to identify and flag fake news, but code will make mistakes.

Social norms constrain behaviour by pressuring individuals to conform to certain standards and practices of conduct. Seana Shiffrin advocates for a norm of sincerity to govern our speech with others, but norms are usually not the result of design and planning; they instead arise organically, and they are often nebulous and diverse. We could encourage platforms to use their own powerful voices to criticise inaccurate information. A norm-based approach could add a disclaimer to false content without censoring it. However, platforms have been reluctant to take a position even in clear fake news cases. Also, this works best (and perhaps only) for clearly false stories.

Tags: Fake news and disinformation, Media freedom, Freedom of expression, Social media

The content of this article can be used according to the terms of Creative Commons: Attribution-NonCommercial 4.0 International (CC BY-NC 4.0). To do so, use the wording "this article was originally published on the Resource Centre on Media Freedom in Europe", including a direct active link to the original article page.